Project

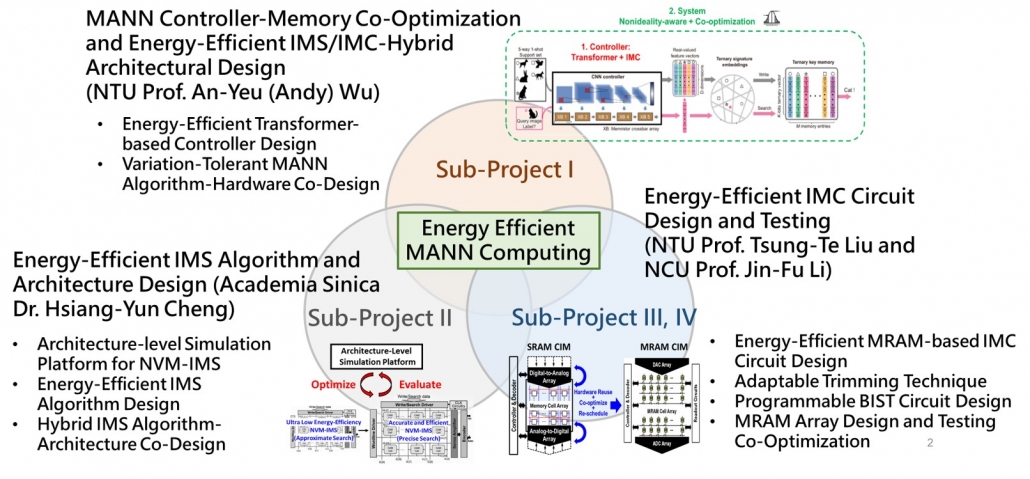

AI Architectures and Circuits for High-efficiency Memory-Augmented Neural Networks (MANN)

PI

Co-PI

Parasitic-free stacked nanowires combined with 3D MTJ technology:

Prof. Chee Wee Liu’s group presented high-stack, high-mobility channel nanowires at the 2021 VLSI Symposia on Technology, where the work was selected as a Highlight Paper. The drive current set a world record for germanium/silicon three-dimensional transistors (>4000 μA/μm at VOV = VDS = 0.5 V) [1]. The result was featured as a Research Highlight in the journal Nature Electronics and was also reported in NTU News and NTU HIGHLIGHTS.

3D MTJ technology:

Prof. Chee Wee Liu’s group demonstrated a spin-orbit-torque magnetic tunnel junction (SOT-MTJ) at the 2021 VLSI Symposia on Technology, where the work was selected as a Highlight Paper. They achieved thermal stability up to 400°C by using tungsten as a multi-layered diffusion barrier. They also used tantalum tungsten as the spin-orbit-torque channel, enabling a high spin Hall angle and broadening the process window for device etching. Furthermore, they demonstrated zero-field switching using the spin-transfer torque (STT) effect [2].

High-performance and high-resolution computing-in-memory system:

The high-performance, high-resolution computing-in-memory (CIM) system developed by Professor Liu’s team addresses redundant circuit operations between the memory array and the digital and analog conversion interface. Sub-project 2 introduces a novel CIM cell design that integrates four key functions: 1) memory read/write, 2) multiply-accumulate (MAC), 3) analog-to-digital conversion (ADC), and 4) reference voltage (Vref) generation and SAR capacitor switching. This co-optimized design eliminates the need for a separate, costly ADC on the bit line to convert the MAC result into digital codes. Instead, the ADC operation is integrated into the memory array, significantly reducing redundant energy and area consumption. As a result, the energy efficiency at the CIM macro level reaches 383 TOPS/W. These findings have been published in the IEEE Journal of Solid-State Circuits (JSSC) [3].
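The MAC-then-convert flow described above can be illustrated with a purely behavioral sketch. It is not the circuit from [3]: the function names, the 8-input column, and the 3-bit resolution are assumptions chosen for illustration. The sketch only shows how a successive-approximation (SAR) search turns an accumulated MAC value into a digital code by comparing it against a sequence of trial reference levels.

```python
def mac(inputs, weights):
    """Multiply-accumulate: dot product of input bits and stored weights."""
    return sum(x * w for x, w in zip(inputs, weights))


def sar_convert(value, full_scale, bits):
    """Successive approximation: binary search for the output code, MSB first.

    Each step proposes a trial code, derives the matching reference level,
    and keeps the bit only if the value is at or above that reference.
    """
    code = 0
    for bit in reversed(range(bits)):
        trial = code | (1 << bit)
        vref = trial * full_scale / (1 << bits)  # hypothetical ideal reference
        if value >= vref:
            code = trial
    return code


# Hypothetical 8-input column with binary inputs and binary weights.
inputs = [1, 0, 1, 1, 1, 0, 1, 1]
weights = [1, 1, 1, 0, 1, 1, 1, 1]
acc = mac(inputs, weights)
code = sar_convert(acc, full_scale=8, bits=3)
print(acc, code)
```

In this idealized model the conversion takes one comparison per output bit, which is why folding the reference generation and comparison steps into the array can remove a standalone converter from the bit line.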

Development of High Energy Efficiency AI Computing Architecture and Algorithms

Prof. An-Yeu (Andy) Wu’s research team published the paper “Trainable Energy-aware Pruning (T-EAP)”, which won the Best Student Paper Award at the IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS) in 2022 [4]. The technique takes the energy consumption information simulated by the DNN+NeuroSim platform, developed by the Georgia Tech team, and uses it during DNN pruning to remove the weights that consume the most energy, aiming to achieve the best accuracy-energy trade-off in the literature.
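The idea of letting energy cost steer pruning can be sketched as follows. This is a simplified, non-trainable heuristic and not the T-EAP algorithm from [4]: the scoring rule (magnitude divided by energy), the function name, and the per-weight energy values are assumptions for illustration. In the actual work the energy figures come from DNN+NeuroSim simulation.

```python
def energy_aware_prune(weights, energy, sparsity):
    """Zero out the fraction `sparsity` of weights with the lowest
    importance-per-energy score (|w| / energy)."""
    n_prune = int(len(weights) * sparsity)
    # Low score = small magnitude and/or high energy cost, so prune first.
    order = sorted(range(len(weights)),
                   key=lambda i: abs(weights[i]) / energy[i])
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights and per-weight energy costs (arbitrary units).
weights = [0.5, -0.4, 0.3, 0.2]
energy = [4.0, 1.0, 1.0, 1.0]
pruned = energy_aware_prune(weights, energy, sparsity=0.5)
print(pruned)
```

With these example values, plain magnitude pruning would drop the two smallest weights (0.3 and 0.2), whereas the energy-aware score drops the largest weight instead of 0.3 because it is four times as expensive to keep.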

Based on T-EAP, the team further proposed “E-UPQ: Energy-Aware Unified Pruning-Quantization Framework”, in which trainable parameters drive a differentiable joint search over pruning and quantization during compression. Compared with the full-precision model, the team’s CIM energy-aware compression technique increased the energy efficiency (TOPS/W) of the ResNet-18 and VGG-16 models running on the CIM platform fourfold. This work has been published in the IEEE Journal on Emerging and Selected Topics in Circuits and Systems [5].

References:

[1] Y.-C. Liu et al., “Highly Stacked GeSi Nanosheets and Nanowires by Low-Temperature Epitaxy and Wet Etching,” in IEEE Transactions on Electron Devices, vol. 68, no. 12, pp. 6599-6604, 2021.

[2] Y.-J. Tsou et al., “Thermally Robust Perpendicular SOT-MTJ Memory Cells With STT-Assisted Field-Free Switching,” in IEEE Transactions on Electron Devices, vol. 68, no. 12, pp. 6623-6628, 2021.

[3] C.-Y. Yao, T.-Y. Wu, H.-C. Liang, Y.-K. Chen and T.-T. Liu, “A Fully Bit-Flexible Computation in Memory Macro Using Multi-Functional Computing Bit Cell and Embedded Input Sparsity Sensing,” in IEEE Journal of Solid-State Circuits, 2023.

[4] C.-Y. Chang, Y.-C. Chuang, K.-C. Chou and A.-Y. Wu, “T-EAP: Trainable Energy-Aware Pruning for NVM-based Computing-in-Memory Architecture,” in IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), pp. 78-81, 2022.

[5] C.-Y. Chang, K.-C. Chou, Y.-C. Chuang and A.-Y. Wu, “E-UPQ: Energy-Aware Unified Pruning-Quantization Framework for CIM Architecture,” in IEEE Journal on Emerging and Selected Topics in Circuits and Systems, vol. 13, no. 1, pp. 21-32, 2023.